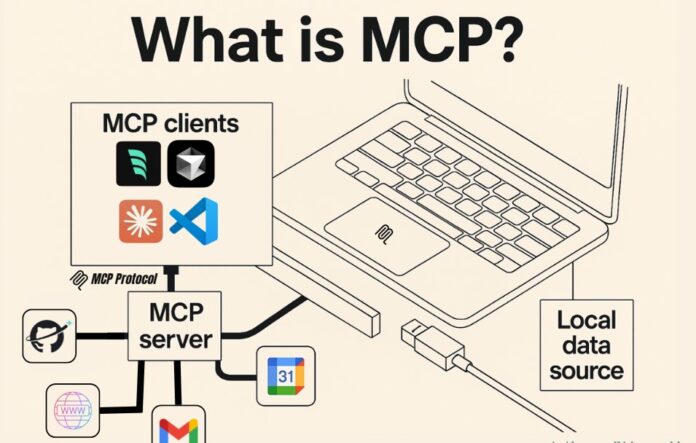

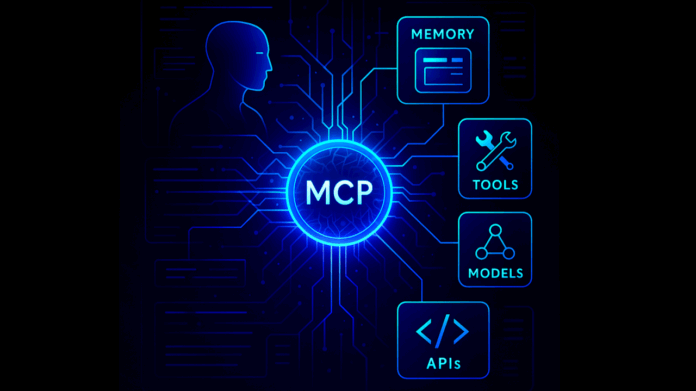

Model Context Protocol servers make large language models practical at work by brokering context, tools, and data in a standardized way. Instead of wiring each model to every database or API, an MCP layer exposes tools, memories, and docs as discoverable capabilities.

Teams gain a single control plane for access rules, observability, caching, and fallbacks. The result is faster prototyping, safer production, and clearer accountability between model providers and your systems.

This article shows how to compare options, evaluate real performance, and keep tabs on trends without getting lost in vendor claims.

What MCP servers do for real teams

An MCP layer centralizes everything a model needs to reason and act. That includes semantic search over internal docs, structured tool calls, auth to third-party services, and per-tenant policy. Good platforms also bring tracing for prompts, retries, and cost control, so you can test safely, then scale.

Practical advantages to expect:

- One contract for tools and data across multiple LLMs

- Consistent auth, rate limits, and governance

- Observability for latency, token spend, and errors

- Caching, deduped calls, and safe fallbacks

This foundation turns ad-hoc chat prototypes into reliable applications that product and security teams can trust.
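To make "one contract for tools and data" concrete, here is a minimal sketch of a tool registry with discovery and a scope check in front of every call. It is not the API of any particular MCP server: the `Tool` and `ToolRegistry` classes, the `docs:read` scope, and the `search_docs` handler are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Set


@dataclass(frozen=True)
class Tool:
    """Hypothetical tool contract shared by every model that uses the layer."""
    name: str
    description: str
    required_scope: str          # scope a caller must hold to run the tool
    handler: Callable[..., Any]  # the function that does the actual work


class ToolRegistry:
    """Toy registry: discovery plus a scope check in front of every call."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"tool already registered: {tool.name}")
        self._tools[tool.name] = tool

    def list_tools(self) -> List[Dict[str, str]]:
        # What a model-facing client would see when it asks for capabilities.
        return [
            {"name": t.name, "description": t.description}
            for t in sorted(self._tools.values(), key=lambda t: t.name)
        ]

    def call(self, name: str, caller_scopes: Set[str], **kwargs: Any) -> Any:
        tool = self._tools.get(name)
        if tool is None:
            raise KeyError(f"unknown tool: {name}")
        if tool.required_scope not in caller_scopes:
            raise PermissionError(f"{name} requires scope {tool.required_scope}")
        return tool.handler(**kwargs)


def search_docs(query: str) -> List[str]:
    # Stand-in for a real semantic search backend.
    return [f"doc matching '{query}'"]


registry = ToolRegistry()
registry.register(Tool("search_docs", "Search internal docs", "docs:read", search_docs))
```

Because every call passes through `ToolRegistry.call`, auth, rate limits, and tracing can be added in one place instead of once per model integration.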

Compare MCP servers wisely

Before demos impress you, standardize how you compare. Look for open protocols, clean tool schemas, and proof that the platform handles messy production realities like flaky APIs and permission scoping. This MCP comparison guide can help you align stakeholders on criteria, avoid brand bias, and keep decisions evidence-based.

Core criteria:

| Criterion | Why it matters | What good looks like |
| --- | --- | --- |
| Integration surface | Connect models, tools, vectors, and webhooks | Native adapters plus generic SDKs |
| Policy and auth | Prevent data leaks and enforce who can run what | First-class RBAC and secret vaults |
| Observability | Diagnose slow or costly calls fast | Traces, metrics, replay, export |
| Reliability | Keep flows running under real load | Retries, circuit breakers, queues |

Always test with your ugliest workflows, not a vendor’s happy path.
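One way to keep the comparison evidence-based is to turn the four criteria above into a weighted score that every stakeholder fills in the same way. The sketch below is only an illustration: the weights, the 0 to 5 rating scale, and the `score_platform` function are assumptions, not a standard method.

```python
from typing import Dict

# Hypothetical weights; agree on these with stakeholders before any demo.
WEIGHTS: Dict[str, float] = {
    "integration_surface": 0.25,
    "policy_and_auth": 0.30,
    "observability": 0.20,
    "reliability": 0.25,
}


def score_platform(ratings: Dict[str, int], weights: Dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of 0-5 ratings, one rating per criterion."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for criterion in weights:
        if not 0 <= ratings[criterion] <= 5:
            raise ValueError(f"rating for {criterion} must be between 0 and 5")
    total_weight = sum(weights.values())
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


# Example ratings for an imaginary candidate, gathered from your own tests.
candidate_a = {
    "integration_surface": 5,
    "policy_and_auth": 3,
    "observability": 4,
    "reliability": 2,
}
print(round(score_platform(candidate_a), 2))
```

With these example numbers the candidate scores about 3.45 out of 5. The strong integration rating does not hide the weak reliability rating, which is the point of fixing the weights up front.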

Evaluate reliability, security, and total cost

Benchmarks should mirror production. Run load tests on common tool chains, measure p95 latency, and track tool success rates across hours, not minutes. Inspect sandboxing for tool execution, secrets management, and data egress controls. For cost, model tokens are only part of the story. Include vector operations, storage, logs, and orchestration overhead.
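A simple cost breakdown makes the point that tokens are only one line item. The sketch below assumes invented unit prices and usage figures; replace every number with values from your own invoices and telemetry. `MonthlyUsage`, `UNIT_PRICES`, and `monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class MonthlyUsage:
    model_tokens_millions: float
    vector_ops_millions: float
    storage_gb: float
    log_gb: float
    orchestration_hours: float


# Placeholder unit prices in USD, invented for illustration only.
UNIT_PRICES: Dict[str, float] = {
    "model_tokens_millions": 4.00,
    "vector_ops_millions": 0.50,
    "storage_gb": 0.10,
    "log_gb": 0.50,
    "orchestration_hours": 0.05,
}


def monthly_cost(usage: MonthlyUsage, prices: Dict[str, float] = UNIT_PRICES) -> Dict[str, float]:
    """Multiply each usage figure by its unit price and add a total."""
    breakdown = {item: getattr(usage, item) * price for item, price in prices.items()}
    breakdown["total"] = sum(breakdown.values())
    return breakdown


# Made-up usage for one month.
usage = MonthlyUsage(
    model_tokens_millions=100,
    vector_ops_millions=50,
    storage_gb=200,
    log_gb=300,
    orchestration_hours=720,
)
costs = monthly_cost(usage)
non_token_share = 1 - costs["model_tokens_millions"] / costs["total"]
print(f"total: {costs['total']:.2f} USD, non-token share: {non_token_share:.0%}")
```

Tracking the non-token share over time shows whether logs, storage, or orchestration are quietly becoming the larger part of the bill.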

“If you cannot trace a failing tool call to the exact prompt, inputs, and policy in seconds, you will pay that time back in incidents.”

Field checklist:

- p95 latency per tool, end-to-end and cold start

- Tool success rate and retry behavior

- Policy coverage for PII, tenancy, and scopes

- Rollback plan for schema or tool changes
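The first two checklist items can be computed from raw call logs with very little code. This sketch assumes each log entry is a `(tool_name, latency_ms, succeeded)` tuple, which is a made-up format, and uses the nearest-rank definition of a percentile. Other percentile definitions interpolate and can give slightly different values.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(calls: Iterable[Tuple[str, float, bool]]) -> Dict[str, Dict[str, float]]:
    """Group (tool_name, latency_ms, succeeded) entries by tool and report p95 and success rate."""
    latencies: Dict[str, List[float]] = defaultdict(list)
    successes: Dict[str, int] = defaultdict(int)
    for tool, latency_ms, ok in calls:
        latencies[tool].append(latency_ms)
        successes[tool] += int(ok)
    return {
        tool: {
            "calls": len(samples),
            "p95_ms": percentile(samples, 95),
            "success_rate": successes[tool] / len(samples),
        }
        for tool, samples in latencies.items()
    }


# Tiny made-up log; a real run should cover hours of traffic.
log = [
    ("search", 100, True),
    ("search", 200, True),
    ("search", 300, False),
    ("search", 400, True),
    ("billing", 50, True),
]
report = summarize(log)
```

Run the same summary separately for cold-start calls and warm calls so a slow first request is not averaged away.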

Track emerging trends and roadmap signals

The MCP world is moving quickly toward richer protocols and safer execution. Expect deeper model-agnostic tooling, event-driven actions, and better offline evaluation harnesses integrated directly into the control plane. Vendor roadmaps that emphasize open standards and exportable data usually age better than closed stacks.

Did you know?

Teams report major gains when they standardize tool schemas early, then reuse them across models. Event streams tied to traces reduce time to fix flaky third-party integrations.

Trends to watch:

- Stronger RBAC and policy-as-code for regulated teams

- Built-in evaluators for quality, cost, and regressions

- Edge inference options paired with central policy control

Bring it together

Treat your MCP layer like any other critical platform: define success metrics, run adversarial tests, and keep decisions transparent.

Start with a narrow use case, wire tools behind clear policies, and insist on full tracing and replay from day one.
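As a rough illustration of what tracing and replay mean at the smallest scale, the sketch below records the prompt, inputs, and policy version for every tool call and lets you re-run a recorded call later. The `TraceRecord` fields, the in-memory `TRACES` list, and the `traced_call` and `replay` helpers are hypothetical; a real platform would persist and index this data.

```python
import time
import uuid
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class TraceRecord:
    trace_id: str
    tool: str
    prompt: str
    inputs: Dict[str, Any]
    policy_version: str
    ok: bool
    error: Optional[str]
    duration_ms: float


TRACES: List[TraceRecord] = []  # in-memory stand-in for a real trace store


def traced_call(tool: str, fn: Callable[..., Any], prompt: str,
                inputs: Dict[str, Any], policy_version: str) -> Any:
    """Run fn(**inputs) and always record a trace, even when the call fails."""
    start = time.perf_counter()
    ok, error = True, None
    try:
        return fn(**inputs)
    except Exception as exc:
        ok, error = False, repr(exc)
        raise  # the caller still sees the failure
    finally:
        TRACES.append(TraceRecord(
            trace_id=uuid.uuid4().hex,
            tool=tool,
            prompt=prompt,
            inputs=dict(inputs),  # copy so later mutation cannot change the record
            policy_version=policy_version,
            ok=ok,
            error=error,
            duration_ms=(time.perf_counter() - start) * 1000,
        ))


def replay(record: TraceRecord, fn: Callable[..., Any]) -> Any:
    """Re-run a recorded call with exactly the inputs that were captured."""
    return fn(**record.inputs)


def failed_traces() -> List[TraceRecord]:
    return [t for t in TRACES if not t.ok]
```

Filtering `failed_traces()` by tool or policy version is the kind of lookup that should take seconds during an incident.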

Agree on your non-negotiables, and use a shared scorecard to inform your in-house tests, not to replace them. When the platform elevates safety, speed, and clarity at the same time, you will know you picked the right foundation for your AI applications.